The Pulitzer Prize-winning book Gödel, Escher, Bach inspired legions of computer scientists in 1979, but few were as inspired as Melanie Mitchell. After reading the 777-page tome, Mitchell, a high school math teacher in New York, decided she "needed to be" in artificial intelligence. She soon tracked down the book's author, AI researcher Douglas Hofstadter, and talked him into giving her an internship. She had only taken a handful of computer science courses at the time, but he seemed impressed with her chutzpah and unconcerned about her academic credentials.

Mitchell prepared a "last-minute" graduate school application and joined Hofstadter's new lab at the University of Michigan in Ann Arbor. The two spent the next six years collaborating closely on Copycat, a computer program which, in the words of its co-creators, was designed to "discover insightful analogies, and to do so in a psychologically realistic way."

The analogies Copycat came up with were between simple patterns of letters, akin to the analogies on standardized tests. One example: "If the string 'abc' changes to the string 'abd,' what does the string 'pqrs' change to?" Hofstadter and Mitchell believed that understanding the cognitive process of analogy (how human beings make abstract connections between similar ideas, perceptions and experiences) would be crucial to unlocking humanlike artificial intelligence.
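To make the letter-string puzzle concrete, here is a deliberately naive Python sketch. It is not how Copycat works (Copycat does not search a fixed list of rules); it simply assumes a small, hypothetical rule library, finds the first rule that turns "abc" into "abd," and applies that rule to "pqrs." The names `RULES`, `successor` and `solve` are illustrative inventions.

```python
# Naive letter-string analogy solver (NOT Copycat's architecture).
# Assumption: the source -> target change is explained by one rule taken
# from a tiny, hand-written, hypothetical rule library.
from typing import Callable, Optional


def successor(ch: str) -> str:
    """Next letter of the alphabet; this toy does not wrap 'z' around."""
    if ch == "z":
        raise ValueError("'z' has no successor")
    return chr(ord(ch) + 1)


# Ordered from more abstract to more literal; the order is a hand-coded
# preference, not something the program discovers.
RULES: list[tuple[str, Callable[[str], str]]] = [
    ("replace rightmost letter by its successor", lambda s: s[:-1] + successor(s[-1])),
    ("replace leftmost letter by its successor", lambda s: successor(s[0]) + s[1:]),
    ("replace every letter by its successor", lambda s: "".join(successor(c) for c in s)),
    ("replace every 'c' by 'd'", lambda s: s.replace("c", "d")),
]


def solve(source: str, target: str, probe: str) -> Optional[tuple[str, str]]:
    """Return (rule description, answer) for the first rule that maps
    source to target and can also be applied to probe; else None."""
    for description, rule in RULES:
        try:
            if rule(source) == target:
                return description, rule(probe)
        except ValueError:
            continue  # rule not applicable here (e.g. successor of 'z')
    return None


print(solve("abc", "abd", "pqrs"))
```

Calling `solve("abc", "abd", "pqrs")` returns the rightmost-letter rule and the answer "pqrt". The ordering of `RULES` stands in for the judgment Copycat was meant to exercise on its own: the literal rule "replace every 'c' by 'd'" also explains abc → abd, but applied to "pqrs" it changes nothing.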

Mitchell maintains that analogy can go much deeper than exam-style pattern matching. "It's understanding the essence of a situation by mapping it to another situation that is already understood," she said. "If you tell me a story and I say, 'Oh, the same thing happened to me,' literally the same thing did not happen to me that happened to you, but I can make a mapping that makes it seem very analogous. It's something that we humans do all the time without even realizing we're doing it. We're swimming in this sea of analogies constantly."

As the Davis professor of complexity at the Santa Fe Institute, Mitchell has broadened her research beyond machine learning. She's currently leading SFI's Foundations of Intelligence in Natural and Artificial Systems project, which will convene a series of interdisciplinary workshops over the next year examining how biological evolution, collective behavior (like that of social insects such as ants) and a physical body all contribute to intelligence. But the role of analogy looms larger than ever in her work, especially in AI. The field's major advances over the past decade have been largely driven by deep neural networks, a technology that mimics the layered organization of neurons in mammalian brains.

"Today's state-of-the-art neural networks are very good at certain tasks," she said, "but they're very bad at taking what they've learned in one kind of situation and transferring it to another," which is the essence of analogy.

Quanta spoke with Mitchell about how AI can make analogies, what the field has learned about them so far, and where it needs to go next. The interview has been condensed and edited for clarity.

Why is analogy-making so important to AI?

It's a fundamental mechanism of thought that will help AI get to where we want it to be. Some people say that being able to predict the future is what's key for AI, or being able to have common sense, or the ability to retrieve memories that are useful in a current situation. But in each of these things, analogy is very central.

For example, we want self-driving cars, but one of the problems is that if they face some situation that's just slightly different from what they've been trained on, they don't know what to do. How do we humans know what to do in situations we haven't encountered before? Well, we use analogies to previous experience. And that's something that these AI systems in the real world are going to need to be able to do, too.

But you've also written that analogy is "an understudied area in AI." If it's so fundamental, why is that the case?

One reason people haven't studied it as much is because they haven't recognized its essential importance to cognition. Focusing on logic and programming in the rules for behavior: that's the way early AI worked. More recently people have focused on learning from lots and lots of examples, and then assuming that you'll be able to do induction to things you haven't seen before using just the statistics of what you've already learned. They hoped the abilities to generalize and abstract would kind of come out of the statistics, but it hasn't worked as well as people had hoped.

You can show a deep neural network millions of pictures of bridges, for example, and it can probably recognize a new picture of a bridge over a river or something. But it can never abstract the notion of "bridge" to, say, our concept of bridging the gender gap. These networks, it turns out, don't learn how to abstract. There's something missing. And people are only now sort of grappling with that.

Melanie Mitchell, the Davis professor of complexity at the Santa Fe Institute, has worked on digital minds for decades. She says AI will never truly be "intelligent" until it can do something uniquely human: make analogies. Credit: Emily Buder/Quanta Magazine; Gabriella Marks for Quanta Magazine

And they'll never learn to abstract?

There are new approaches, like meta-learning, where the machines "learn to learn" better. Or self-supervised learning, where systems like GPT-3 learn to fill in a sentence with one of the words missing, which lets them generate language very, very convincingly. Some people would argue that systems like that will eventually, with enough data, learn to do this abstraction task. But I don't think so.

You've described this limitation as "the barrier of meaning": AI systems can mimic understanding under certain conditions, but become brittle and unreliable outside of them. Why do you think analogy is our way out of this problem?

My feeling is that solving the brittleness problem will require meaning. That's what ultimately causes the brittleness problem: These systems don't understand, in any humanlike sense, the data that they're dealing with.

This word "understand" is one of these suitcase words whose meaning no one agrees on, almost like a placeholder for mental phenomena that we can't explain yet. But I think this mechanism of abstraction and analogy is key to what we humans call understanding. It is a mechanism by which understanding occurs. We're able to take something we already know in some way and map it to something new.

So analogy is a way that organisms stay cognitively flexible, instead of behaving like robots?

I think to some extent, yes. Analogy isn't just something we humans do. Some animals are kind of robotic, but other species are able to take prior experiences and map them onto new experiences. Maybe it's one way to put a spectrum of intelligence onto different kinds of living systems: To what extent can you make more abstract analogies?

One of the theories of why humans have this particular kind of intelligence is that it's because we're so social. One of the most important things for you to do is to model what other people are thinking, understand their goals and predict what they're going to do. And that's something you do by analogy to yourself. You can put yourself in the other person's position and kind of map your own mind onto theirs. This "theory of mind" is something that people in AI talk about all the time. It's essentially a way of making an analogy.

Your Copycat system was an early attempt at doing this with a computer. Were there others?

"Structure mapping" work in AI focused on logic-based representations of situations and making mappings between them. Ken Forbus and others used the famous analogy [made by Ernest Rutherford in 1911] of the solar system to the atom. They would have a set of sentences [in a formal notation called predicate logic] describing these two situations, and they mapped them not based on the content of the sentences, but based on their structure. This notion is very powerful, and I think it's right. When humans are trying to make sense of similarities, we're more focused on relationships than specific objects.
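The idea of mapping two situations by their structure rather than their content can be illustrated with a small Python sketch. It encodes each situation as a set of relation facts, a simplified stand-in for predicate-logic sentences, and brute-forces the one-to-one object mapping that carries the most facts from one situation onto the other. This is an assumption-laden toy, not the actual structure-mapping algorithms used in that work; the fact names, the encoding of attributes and the scoring rule are all hypothetical.

```python
# Toy structure mapping by brute force (not the real structure-mapping
# algorithms). Each fact is (relation, arg1, arg2); one-place attributes
# such as "hot" are written as (attribute, obj, None).
from itertools import permutations

SOLAR_SYSTEM = {
    ("revolves_around", "earth", "sun"),
    ("more_massive_than", "sun", "earth"),
    ("attracts", "sun", "earth"),
    ("hot", "sun", None),
    ("yellow", "sun", None),
}
ATOM = {
    ("revolves_around", "electron", "nucleus"),
    ("more_massive_than", "nucleus", "electron"),
    ("attracts", "nucleus", "electron"),
}


def objects(facts):
    """All object names mentioned in a set of facts, sorted for determinism."""
    return sorted({a for _, a, _ in facts} | {b for _, _, b in facts if b is not None})


def translate(fact, mapping):
    """Rewrite a base fact in terms of target objects using the mapping."""
    name, a, b = fact
    return (name, mapping[a], None if b is None else mapping[b])


def best_mapping(base, target):
    """Try every one-to-one mapping of base objects onto target objects and
    keep the one under which the most base facts also hold in target."""
    base_objs, target_objs = objects(base), objects(target)
    best, best_score = None, -1
    for perm in permutations(target_objs, len(base_objs)):
        mapping = dict(zip(base_objs, perm))
        score = sum(translate(f, mapping) in target for f in base)
        if score > best_score:
            best, best_score = mapping, score
    return best, best_score


mapping, score = best_mapping(SOLAR_SYSTEM, ATOM)
print(mapping, score)
```

The winning mapping pairs the sun with the nucleus and the Earth with the electron, preserving three relations, while the sun's attributes ("hot," "yellow") find no counterpart and simply drop out. Exhaustive search only works for tiny examples, and the relation names are opaque strings: nothing in the code knows what "revolves around" means.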

Why didn't these approaches take off?

The whole issue of learning was largely left out of these systems. Structure mapping would take these words that were very, very laden with human meaning, like "the Earth revolves around the sun" and "the electron revolves around the nucleus," and map them onto each other, but there was no internal model of what "revolves around" meant. It was just a symbol. Copycat worked well with letter strings, but what we lacked was an answer to the question of how do we scale this up and generalize it to domains that we actually care about?

Deep learning famously scales quite well. Has it been any more effective at producing meaningful analogies?

There's a view that deep neural networks kind of do this magic in between their input and output layers. If they can be better than humans at recognizing different kinds of dog breeds (which they are), they should be able to do these really simple analogy problems. So people would create one big data set to train and test their neural network on and publish a paper saying, "Our method gets 80% right on this test." And somebody else would say, "Wait, your data set has some weird statistical properties that allow the machine to learn how to solve them without being able to generalize. Here's a new data set that your machine does horribly on, but ours does great." And this goes on and on and on.

The problem is that you've already lost the battle if you're having to train it on thousands and thousands of examples. That's not what abstraction is all about. It's all about what people in machine learning call "few-shot learning," which means you learn from a very small number of examples. That's what abstraction is really for.

So what is still missing? Why can't we just stick these approaches together like so many Lego blocks?

We don't have the instruction book that tells you how to do that! But I do think we have to Lego them all together. That's at the frontier of this research: What's the key insight from all of these things, and how can they complement each other?

A lot of people are quite interested in the Abstraction and Reasoning Corpus [ARC], which is a very challenging few-shot learning task built around "core knowledge" that humans are essentially born with. We know that the world should be parsed into objects, and we know something about the geometry of space, like something being over or under something [else]. In ARC, there is one grid of colors that changes into another grid of colors in a way that humans would be able to describe in terms of this core knowledge, like, "All the squares of one color go to the right, all the squares of the other color go to the left." It gives you an example like this and then asks you to do the same thing to another grid of colors.

I think of it very much as an analogy challenge. You're trying to find some kind of abstract description of what the change was from one image to a new image, and you cannot learn any weird statistical correlations because all you have is two examples. How to get machines to learn and reason with this core knowledge that a baby has: this is something that none of the systems I've mentioned so far can do. This is why none of them can deal with this ARC data set. It's a little bit of a holy grail.
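The ARC setup (infer an abstract transformation from one demonstration pair, then apply it to a new grid) can be sketched in Python under heavy simplifying assumptions: colors are integers with 0 as background, and the "abstract description" is chosen from a tiny, hand-written list of candidate transformations. Real ARC tasks are far more open-ended than this, and every name here (`push_colors`, `CANDIDATES`, `infer_and_apply`) is hypothetical.

```python
# Toy ARC-style solver: pick, from a hand-written list, the transformation
# that explains one demonstration pair, then apply it to a test grid.
# Assumptions: a grid is a list of rows of ints, and 0 means background.

Grid = list[list[int]]


def push_colors(grid: Grid, left_color: int, right_color: int) -> Grid:
    """In each row, slide left_color cells to the left edge and right_color
    cells to the right edge; all other cells keep their relative order."""
    out = []
    for row in grid:
        n_left = row.count(left_color)
        n_right = row.count(right_color)
        others = [c for c in row if c not in (left_color, right_color)]
        out.append([left_color] * n_left + others + [right_color] * n_right)
    return out


# Hypothetical hypothesis space; a real solver would need something far richer.
CANDIDATES = {
    "identity": lambda g: [row[:] for row in g],
    "mirror left-right": lambda g: [row[::-1] for row in g],
    "color 1 left, color 2 right": lambda g: push_colors(g, 1, 2),
    "color 2 left, color 1 right": lambda g: push_colors(g, 2, 1),
}


def infer_and_apply(example_in: Grid, example_out: Grid, test_in: Grid):
    """Return the names of all candidates consistent with the demonstration
    and, if exactly one fits, its output on test_in (otherwise None)."""
    consistent = [name for name, f in CANDIDATES.items() if f(example_in) == example_out]
    if len(consistent) != 1:
        return consistent, None  # unexplained or ambiguous
    return consistent, CANDIDATES[consistent[0]](test_in)


names, answer = infer_and_apply(
    [[1, 0, 2], [0, 2, 1]],        # demonstration input
    [[2, 0, 1], [2, 0, 1]],        # demonstration output
    [[0, 1, 0, 2], [2, 2, 0, 1]],  # test input
)
print(names, answer)
```

Only the "color 2 left, color 1 right" candidate reproduces the demonstration, so it is applied to the test grid. The sketch sidesteps the hard part: here a human-written hypothesis list does the abstracting, not the machine.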

If babies are born with this "core knowledge," does that mean that for an AI to make these kinds of analogies, it also needs a body like we have?

That's the million-dollar question. That's a very controversial issue that the AI community has no consensus on. My intuition is that yes, we will not be able to get to humanlike analogy [in AI] without some kind of embodiment. Having a body might be essential because some of these visual problems require you to think of them in three dimensions. And that, for me, has to do with having lived in the world and moved my head around, and understood how things are related spatially. I don't know if a machine has to go through that stage. I think it probably will.

Reprinted with permission from Quanta Magazine, an editorially independent publication of the Simons Foundation whose mission is to enhance public understanding of science by covering research developments and trends in mathematics and the physical and life sciences.

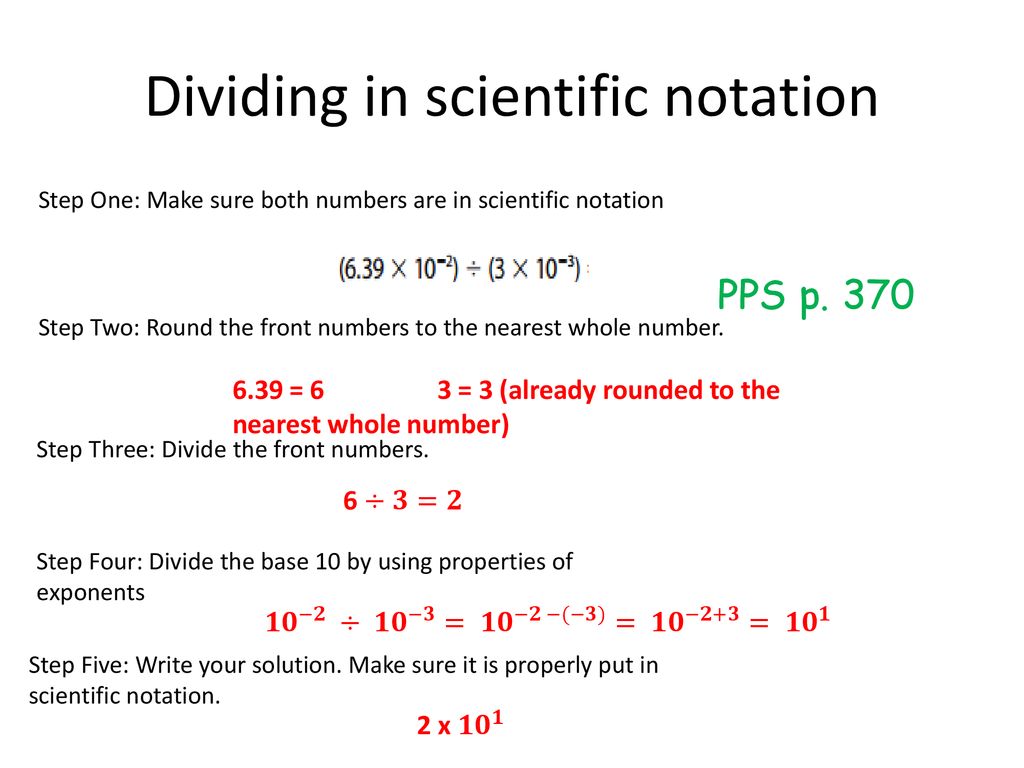

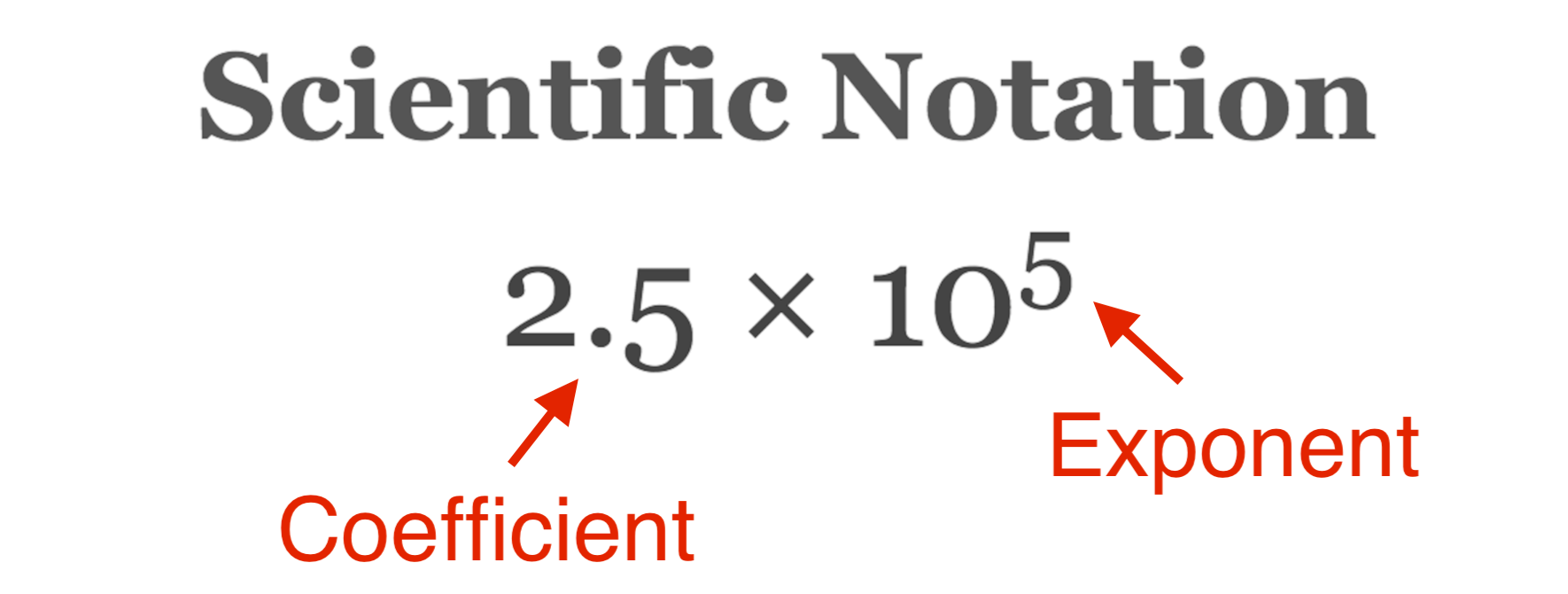

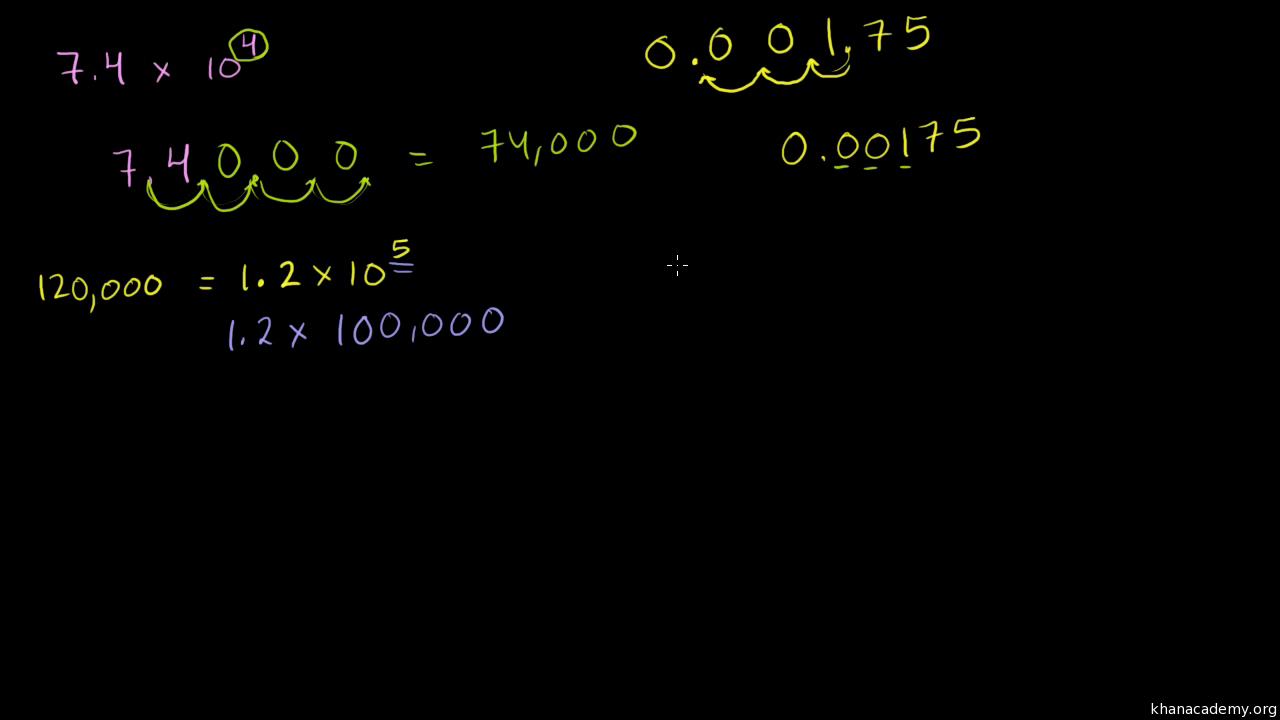

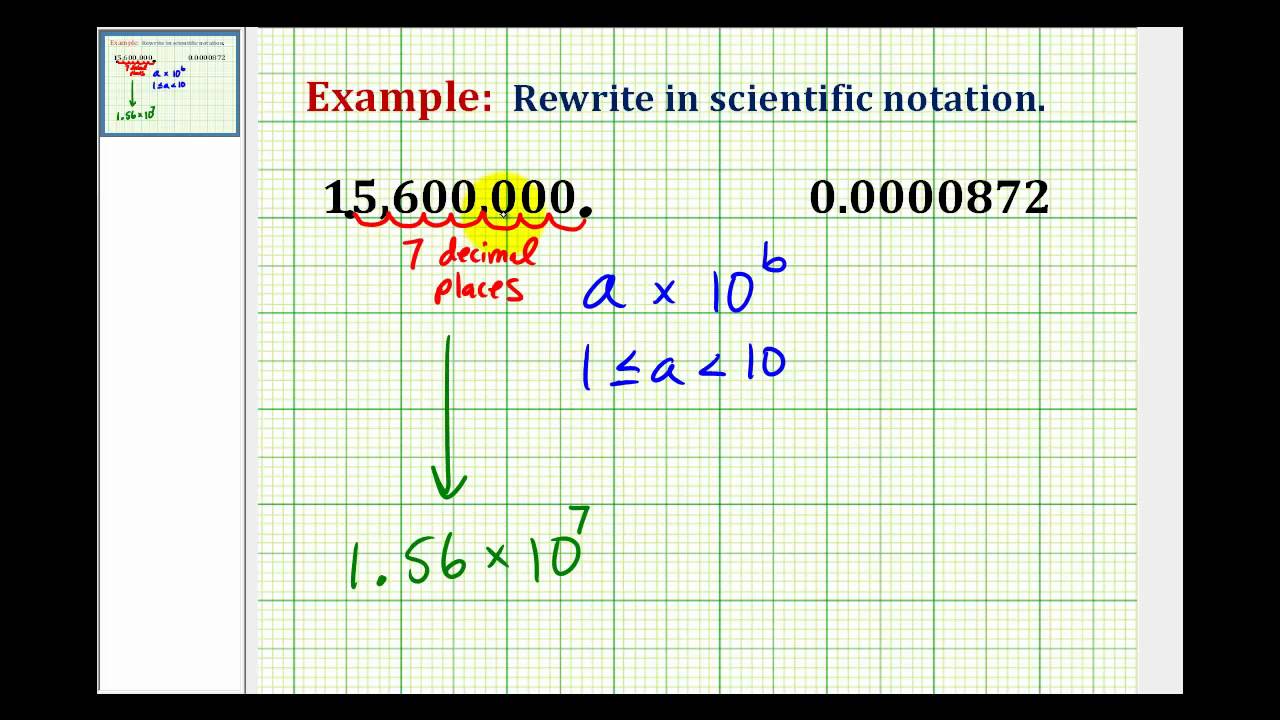

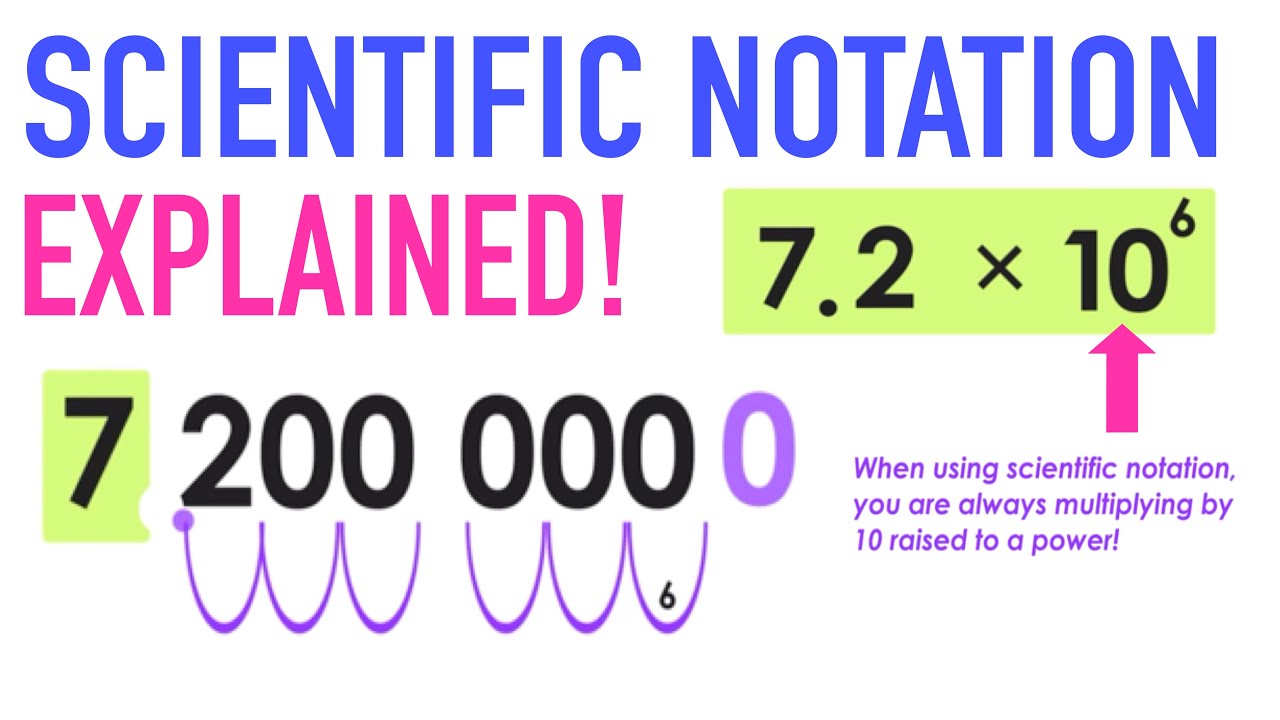

How To Write Whole Numbers In Scientific Notation – How To Write Whole Numbers In Scientific Notation

| Delightful to be able to the blog, in this moment I will demonstrate concerning How To Clean Ruggable. And after this, this is actually the initial image:

Why not consider graphic above? is that will incredible???. if you think thus, I’l l teach you a number of picture once again below:

So, if you would like receive all these awesome shots regarding (How To Write Whole Numbers In Scientific Notation), click save button to download these photos for your laptop. There’re available for download, if you appreciate and want to take it, click save logo on the article, and it will be immediately downloaded in your computer.} As a final point if you desire to get unique and the latest image related to (How To Write Whole Numbers In Scientific Notation), please follow us on google plus or bookmark the site, we try our best to offer you daily update with fresh and new graphics. Hope you enjoy staying here. For many up-dates and recent news about (How To Write Whole Numbers In Scientific Notation) photos, please kindly follow us on twitter, path, Instagram and google plus, or you mark this page on book mark section, We try to give you up-date periodically with all new and fresh shots, enjoy your searching, and find the right for you.

Thanks for visiting our website, contentabove (How To Write Whole Numbers In Scientific Notation) published . Today we’re pleased to announce we have found a veryinteresting topicto be reviewed, that is (How To Write Whole Numbers In Scientific Notation) Most people looking for specifics of(How To Write Whole Numbers In Scientific Notation) and certainly one of them is you, is not it?