Neural networks are all the rage right now, with growing numbers of hackers, students, researchers, and businesses getting involved. The last resurgence was in the 80s and 90s, when there was little or no World Wide Web and few neural network tools. The current resurgence started around 2006. From a hacker’s perspective, what tools and other resources were available back then, what’s available now, and what should we expect for the future? For myself, a GPU on the Raspberry Pi would be nice.

For the young’uns reading this who wonder how us old geezers managed to do anything before the World Wide Web, hardcopy magazines played a big part in making us aware of new things. And so it was Scientific American magazine’s September 1992 special issue on Mind and Brain that introduced me to neural networks, both the biological and artificial kinds.

Back then you had the option of writing your own neural networks from scratch or ordering source code from someone else, which you’d receive on a floppy diskette in the mail. I even ordered a floppy from The Amateur Scientist column of that Scientific American issue. You could also buy a neural network library that would do all the low-level, complex math for you. There was also a free simulator called Xerion from the University of Toronto.

Keeping an eye on the bookstore Science sections did turn up the occasional book on the subject. The classic was the two-volume Explorations in Parallel Distributed Processing, by Rumelhart, McClelland et al. A favorite of mine was Neural Computation and Self-Organizing Maps: An Introduction, useful if you were interested in neural networks controlling a robot arm.

There were also short courses and conferences you could attend. The conference I attended in 1994 was a free two-day one put on by Geoffrey Hinton, then of the University of Toronto, both then and now a leader in the field. The most highly regarded annual conference at the time was the Neural Information Processing Systems conference, still going strong today.

And lastly, I recall combing the libraries for published papers. My stack of conference papers and course handouts, photocopied articles, and handwritten notes from that period is about 3″ thick.

Then things went relatively quiet. While neural networks had found use in a few applications, they hadn’t lived up to their hype, and from the perspective of the world outside of a limited research community, they ceased to matter. Things remained quiet as gradual improvements were made, along with a few breakthroughs, and then finally around 2006 they exploded onto the scene again.

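We’re focusing on tools here but, briefly, those breakthroughs were mainly:

- new techniques for training networks more than three or four layers deep, now called deep neural networks
- the use of GPUs (Graphics Processing Units) to speed up training
- the availability of training data containing large numbers of samples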

There are now numerous neural network libraries, usually called frameworks, available for free download under various licenses, many of them open source. Most of the more popular ones allow you to run your neural networks on GPUs, and are flexible enough to support most types of networks.

Here are most of the more popular ones. They all have GPU support except for FANN.

TensorFlow

Languages: Python, C++ is in the works

TensorFlow is Google’s latest neural network framework. It’s designed for distributing networks across multiple machines and GPUs. It can be considered a low-level one, offering great flexibility but also a steeper learning curve than high-level ones like Keras and TFLearn, both talked about below. However, Google is working on producing a version of Keras integrated into TensorFlow.

We’ve already seen this one in a hack here on Hackaday, in this hammer and beer bottle recognizing robot, and we even have an introduction to using TensorFlow.

Theano

Languages: Python

This is an open source library for doing efficient numerical computations involving multi-dimensional arrays. It’s from the University of Montreal, and runs on Windows, Linux and OS-X. Theano has been around for a long time, with 0.1 having been released in 2009.

Caffe

Languages: Command line, Python, and MATLAB

Caffe is developed by Berkeley AI Research and community contributors. Models can be defined in a plain text file and then processed using a command line tool. There are also Python and MATLAB interfaces. For example, you can define your model in a plain text file, give details on how to train it in a second plain text file called a solver, and then pass these to the caffe command line tool, which will then train a neural network. You can then load this trained net using a Python program and use it to do something, image classification for example.

CNTK

Languages: Python, C++, C#

This is the Microsoft Cognitive Toolkit (CNTK), and it runs on Windows and Linux. They’re currently working on a version to be used with Keras.

Keras

Languages: Python

Written in Python, Keras uses either TensorFlow or Theano underneath, making it easier to use those frameworks. There are also plans to support CNTK. Work is underway to integrate Keras into TensorFlow, resulting in a separate TensorFlow-only version of Keras.

TFLearn

Languages: Python

Like Keras, this is a high-level library built on top of TensorFlow.

FANN

Languages: Supports over 15 languages, no GPU support

This is a high-level open source library written in C. It’s limited to fully connected and sparsely connected neural networks. However, it’s been popular over the years, and has even been included in Linux distributions. It recently showed up here on Hackaday in a robot that learned to walk using reinforcement learning, a machine learning technique that often makes use of neural networks.

Torch

Languages: Lua

Open source library written in C. Interestingly, they say on the front page of their website that Torch is embeddable, with ports to iOS, Android and FPGA backends.

PyTorch

Languages: Python

PyTorch is relatively new (their website says it’s in early-release beta), but there seems to be a lot of interest in it. It runs on Linux and OS-X and uses Torch underneath.

There are no doubt others that I’ve missed. If you have a particular favorite that’s not here, then please let us know in the comments.

Which one should you use? Unless the programming language or OS is an issue, another factor to keep in mind is your skill level. If you’re uncomfortable with math or don’t want to dig deeply into the neural network’s nuances, then choose a high-level one. In that case, stay away from TensorFlow, where you have to learn more about the API than with Keras, TFLearn or the other high-level ones. Frameworks that emphasize their math functionality usually require you to do more work to create the network. Another factor is whether or not you’ll be doing basic research. A high-level framework may not give you enough access to its innards to start making crazy networks, perhaps with connections spanning multiple layers or within layers, and with data flowing in all directions.
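What a “connection spanning multiple layers” means is easier to see in code. The sketch below is plain Python with no framework, and the function names and weights are made up for illustration. It passes the input through two layers and also adds the raw input to the final output, a pattern usually called a skip (or residual) connection. It is exactly the kind of wiring that may be awkward or impossible to express in a strictly layer-by-layer high-level API.

```python
def dense(x, weights, bias):
    """Fully connected layer: out[j] = sum_i x[i] * weights[i][j] + bias[j]."""
    return [sum(xi * row[j] for xi, row in zip(x, weights)) + b
            for j, b in enumerate(bias)]


def relu(v):
    return [max(0.0, a) for a in v]


def forward_with_skip(x, w1, b1, w2, b2):
    """Two stacked layers plus a connection that spans both of them."""
    h = relu(dense(x, w1, b1))   # layer 1
    out = dense(h, w2, b2)       # layer 2
    # Skip connection: the raw input jumps over both layers and is added
    # to layer 2's output, so out and x must have the same length.
    return [o + xi for o, xi in zip(out, x)]


x = [1.0, -2.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
double = [[2.0, 0.0], [0.0, 2.0]]
zeros = [0.0, 0.0]
print(forward_with_skip(x, identity, zeros, double, zeros))  # [3.0, -2.0]
```

Without the final addition this would be an ordinary two-layer network; the extra path from input to output is what a research-oriented framework needs to let you wire up freely.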

Are you looking to add something a neural network would offer to your hack, but don’t want to take the time to learn the intricacies of neural networks? For that there are services available by connecting your hack to the internet.

We’ve seen countless examples making use of Amazon’s Alexa for voice recognition. Google also has its Cloud Machine Learning Services, which include vision and speech. Its vision service has shown up here in projects using Raspberry Pis for candy sorting and reading human emotions. The Wekinator is aimed at artists and musicians; we’ve seen it used to train a neural network to respond to various gestures for turning things on and off around the house, as well as for making a virtual world’s tiniest violin. Not to be left out, Microsoft also has its Cognitive Services APIs, including vision, speech, language and others.

Training a neural network requires iterating through the neural network, forward and then backward, each time improving the network’s accuracy. Up to a point, the more iterations you can do, the better the final accuracy will be when you stop. The number of iterations could be in the hundreds or even thousands. With 1980s and 1990s computers, achieving enough iterations could take an unacceptable amount of time. According to the article Deep Learning in Neural Networks: An Overview, in 2004 a 20-fold speedup was achieved with a GPU for a fully connected neural network. In 2006 a 4-fold speedup was achieved for a convolutional neural network. By 2010, training on a GPU was as much as 50 times faster than on a CPU. As a result, far more iterations fit into the same training time, and accuracies were much higher.
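To make the forward/backward cycle concrete, here is a minimal sketch, assuming the simplest possible “network”: a single sigmoid neuron trained with plain stochastic gradient descent on the logical OR function. It is a toy stand-in, not any framework’s training loop, and the dataset, learning rate and function names are illustrative choices. Training the same starting weights for 1 pass versus 1000 passes over the data shows the “more iterations, better accuracy” effect on a tiny scale.

```python
import math
import random

# Toy labeled dataset: inputs and expected outputs for logical OR
OR_DATA = [((0.0, 0.0), 0), ((0.0, 1.0), 1), ((1.0, 0.0), 1), ((1.0, 1.0), 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w, b, x):
    """Forward pass: weighted sum of the inputs squashed by a sigmoid."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def mean_loss(w, b, data):
    """Average cross-entropy between the predictions and the labels."""
    total = 0.0
    for x, target in data:
        y = min(max(forward(w, b, x), 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= target * math.log(y) + (1 - target) * math.log(1 - y)
    return total / len(data)


def train(data, epochs, lr=0.5, seed=0):
    """Repeat forward and backward passes over the data `epochs` times."""
    rng = random.Random(seed)  # same seed -> same starting weights
    w = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
    b = 0.0
    for _ in range(epochs):
        for x, target in data:
            y = forward(w, b, x)
            # Backward pass: for sigmoid + cross-entropy, dLoss/dz = y - target
            grad = y - target
            w[0] -= lr * grad * x[0]
            w[1] -= lr * grad * x[1]
            b -= lr * grad
    return w, b


def accuracy(w, b, data):
    hits = sum((forward(w, b, x) >= 0.5) == (target == 1) for x, target in data)
    return hits / len(data)


w1, b1 = train(OR_DATA, epochs=1)
w2, b2 = train(OR_DATA, epochs=1000)
print("1 epoch:    ", mean_loss(w1, b1, OR_DATA), accuracy(w1, b1, OR_DATA))
print("1000 epochs:", mean_loss(w2, b2, OR_DATA), accuracy(w2, b2, OR_DATA))
```

A real network has many layers, so the backward pass has to propagate the error through each of them, but the rhythm of forward pass, error, weight update, repeat is the same.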

How do GPUs help? A big part of training a neural network involves doing matrix multiplication, something that is done much faster on a GPU than on a CPU. Nvidia, a leader in making graphics cards and GPUs, created an API called CUDA, which neural network software uses to make use of the GPU. We point this out because you’ll see the term CUDA a lot. With the spread of deep learning, Nvidia has added more APIs, including cuDNN (CUDA for Deep Neural Networks), a library of finely tuned neural network primitives, and another term you’ll see.
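Here is a plain-Python sketch (no CUDA, function names are illustrative) of why matrix multiplication dominates: pushing a whole batch of inputs through one fully connected layer is a single matrix product plus a bias. Every element of the result can be computed independently, which is the kind of work a GPU parallelizes well.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix with plain loops."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            # Every out[i][j] is computed independently of the others,
            # which is why a GPU can work on thousands of them at once.
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out


def dense_layer(batch, weights, bias):
    """Forward pass of one layer for a whole batch: batch @ weights + bias."""
    return [[v + bj for v, bj in zip(row, bias)] for row in matmul(batch, weights)]


def multiply_adds(batch_size, n_inputs, n_units):
    """Multiply-add operations needed for one such layer's forward pass."""
    return batch_size * n_inputs * n_units


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(multiply_adds(64, 784, 128))                 # 6422528
```

For example, 64 MNIST-sized images (28 × 28 = 784 pixels) feeding a 128-unit layer already need 6,422,528 multiply-adds for one forward pass through that single layer, and training repeats this, plus the backward pass, over many iterations.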

Nvidia also has its own single board computer, the Jetson TX2, designed to be the brains for self-driving cars, selfie-snapping drones, and so on. However, as our [Brian Benchoff] has pointed out, the price point is a little high for the average hacker.

Google has also been working on its own hardware acceleration in the form of its Tensor Processing Unit (TPU). You might have noticed the similarity to the name of Google’s framework above, TensorFlow. TensorFlow makes heavy use of tensors (think of single and multi-dimensional arrays in software). According to Google’s paper on the TPU, it’s designed for the inference phase of neural networks. Inference refers not to training neural networks but to using the neural network after it’s been trained. We haven’t seen it used by any frameworks yet, but it’s something to keep in mind.
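You don’t need TensorFlow’s own tensor type to see the idea. As an illustration, with plain Python nested lists standing in for tensors and a hypothetical `shape` helper, here is how rank (number of dimensions) and shape work. The image batch uses a (batch, height, width, channels) layout, which is one common convention but not the only one.

```python
def shape(tensor):
    """Return the dimensions of a rectangular nested-list 'tensor'."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        if not tensor:
            break
        tensor = tensor[0]  # assume every sub-list has the same length
    return tuple(dims)


scalar = 3.0                                   # rank 0
vector = [1.0, 2.0, 3.0]                       # rank 1
matrix = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # rank 2
# A batch of 2 tiny RGB images, 4 x 4 pixels each: rank 4
images = [[[[0.0] * 3 for _ in range(4)] for _ in range(4)] for _ in range(2)]

for t in (scalar, vector, matrix, images):
    print(shape(t), "rank", len(shape(t)))
```

The last line prints `(2, 4, 4, 3) rank 4`: a network doing inference on images consumes exactly this kind of rank-4 array, which is why hardware built around fast tensor operations suits it.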

Do you have a neural network that’ll take a long time to train but don’t have a suitable GPU, or don’t want to tie up your resources? In that case there’s hardware you can use on other machines accessible over the internet. One such is FloydHub which, for an individual, costs only pennies per hour with no monthly payment. Another is Amazon EC2.

We said that one of the breakthroughs in neural networks was the availability of training data containing large numbers of samples, in the tens of thousands. Training a neural network using a supervised training algorithm involves giving the data to the network at its inputs but also telling it what the expected output should be. In that case the data also has to be labeled. If you give an image of a horse to the network’s inputs, and its outputs say it looks like a cheetah, then it needs to know that the error is large and more training is needed. The expected output is called a label, and the data is ‘labeled data’.
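One common way to turn “the error is large” into a number is sketched below, assuming a hypothetical three-class label set, one-hot encoded labels, softmax outputs and a cross-entropy loss (a standard choice for classification, though not the only one). The raw scores are made up: the same horse-labeled image produces a much larger loss when the network’s scores favor “cheetah”.

```python
import math

CLASSES = ["horse", "cheetah", "zebra"]  # hypothetical label set


def one_hot(label):
    """Encode a label as the expected output vector."""
    return [1.0 if c == label else 0.0 for c in CLASSES]


def softmax(scores):
    """Turn raw network outputs into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target):
    """Large when the network gives the labeled class a low probability."""
    return -sum(t * math.log(p) for p, t in zip(probs, target) if t > 0)


label = one_hot("horse")
wrong = softmax([0.5, 3.0, 0.2])  # network's scores favor "cheetah"
right = softmax([3.0, 0.5, 0.2])  # network's scores favor "horse"
print(cross_entropy(wrong, label) > cross_entropy(right, label))  # True
```

Training uses the size of this loss to decide how much to adjust the weights, which is why every sample needs its label.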

Many such datasets are available online for training purposes. MNIST is one such for handwritten character recognition. ImageNet and CIFAR are two different datasets of labeled images. Many more are listed on this Wikipedia page. Many of the frameworks listed above have tutorials that include the necessary datasets.

That’s not to say you absolutely need a large dataset to get decent accuracy. The walking robot we mentioned earlier, which used the FANN framework, used the servo motor positions as its training data.

Unlike in the 80s and 90s, while you can still buy hardcopy books about neural networks, there are numerous ones online. Two online books I’ve enjoyed are Deep Learning by the MIT Press and Neural Networks and Deep Learning. The frameworks listed above all have tutorials to help you get started. And then there are countless other websites and YouTube videos on any topic you search for. I find YouTube videos of recorded lectures and conference talks very useful.

Doubtless the future will see more frameworks coming along.

We’ve long seen specialized neural chips and boards on the market, but none has ever found a big market, even back in the 90s. However, those weren’t designed specifically to serve the real growth area, the neural network software that everyone’s working on. GPUs do serve that market. As neural networks with millions of connections for image and voice processing, language, and so on make their way into smaller and smaller consumer devices, the need for more GPUs or processors tailored to that software will hopefully result in something that can become a new component on a Raspberry Pi or Arduino board. There is also the possibility, though, that processing will remain an online service instead. EDIT: It turns out there is a GPU on the Raspberry Pi (see the comments below). That doesn’t mean all the above frameworks will make use of it, though. For example, TensorFlow supports Nvidia CUDA cards only. But you can still use the GPU for your own custom neural network code. Various links are in the comments for that too.

There is already competition for GPUs from ASICs like the TPU, and it’s possible we’ll see more of those, possibly displacing GPUs from neural networks altogether.

As for our new computer overlord, neural networks as a part of our daily life are here to stay this time, but the hype that is artificial general intelligence will likely die down until someone makes significant breakthroughs, only for it to explode onto the scene once again, but for real this time.

In the meantime, which neural network framework have you used and why? Or did you write your own? Are there any tools missing that you’d like to see? Let us know in the comments below.

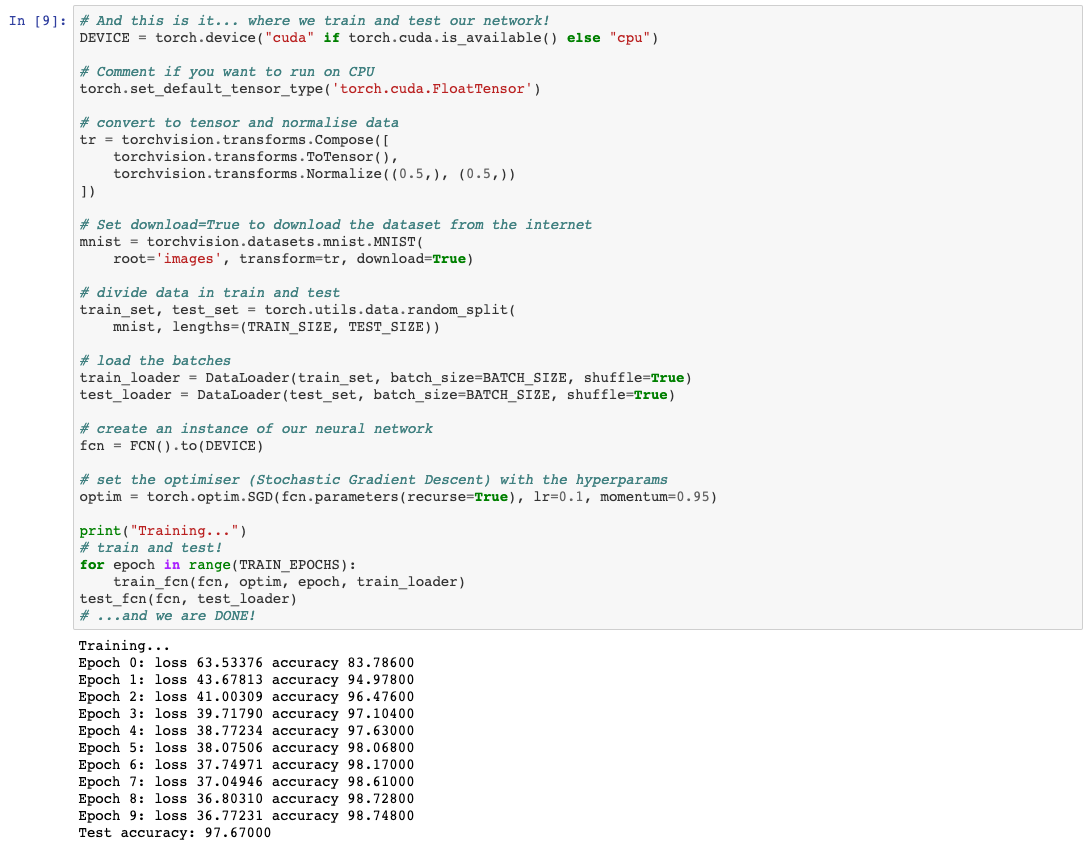

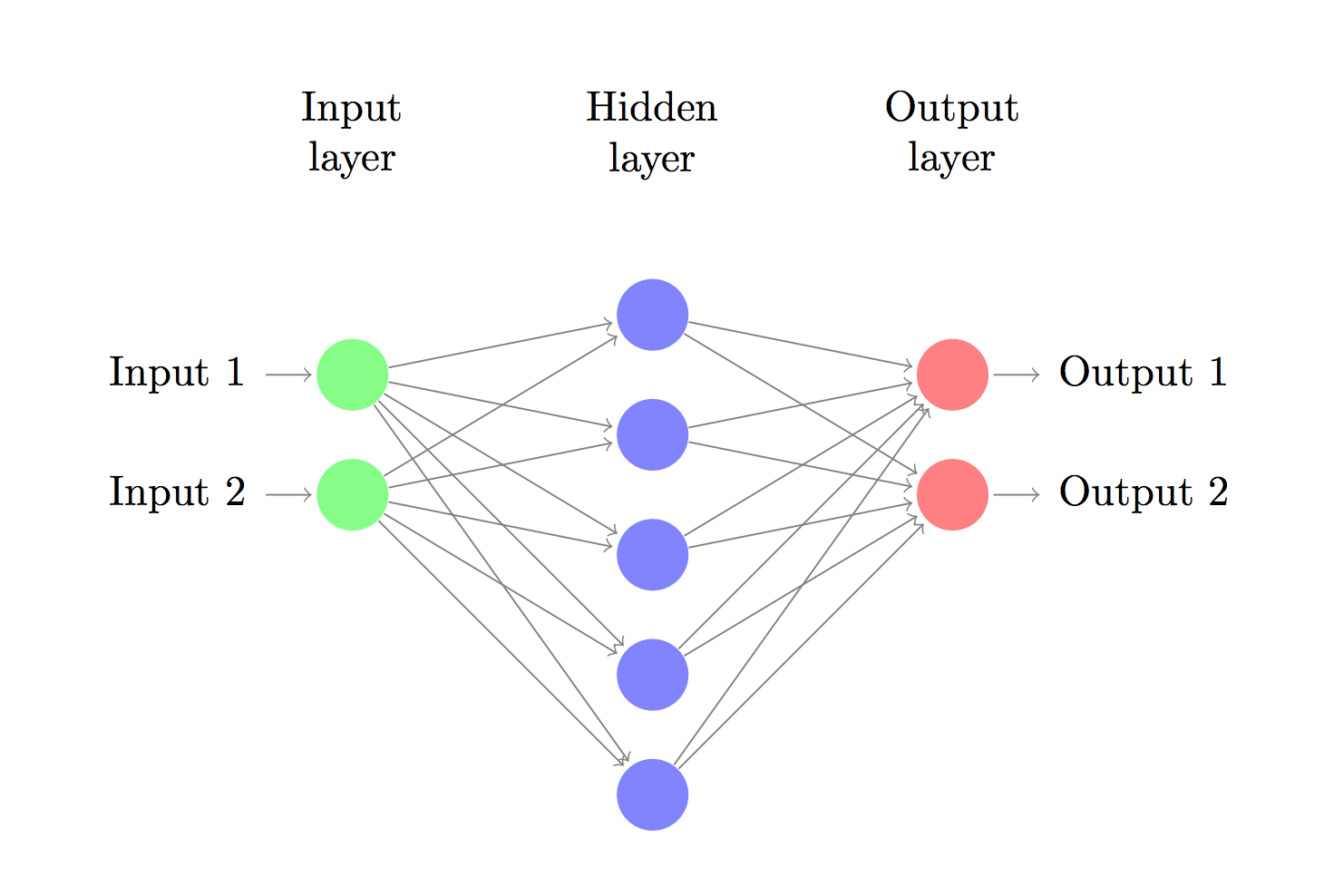

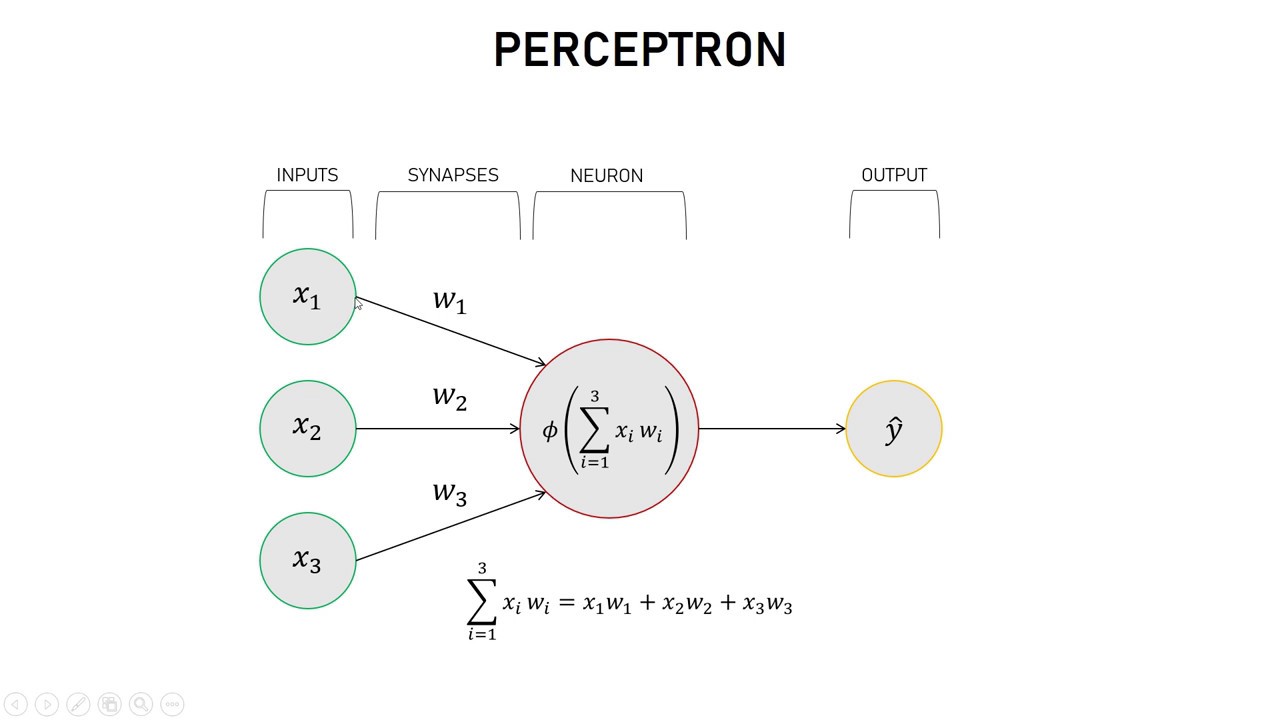

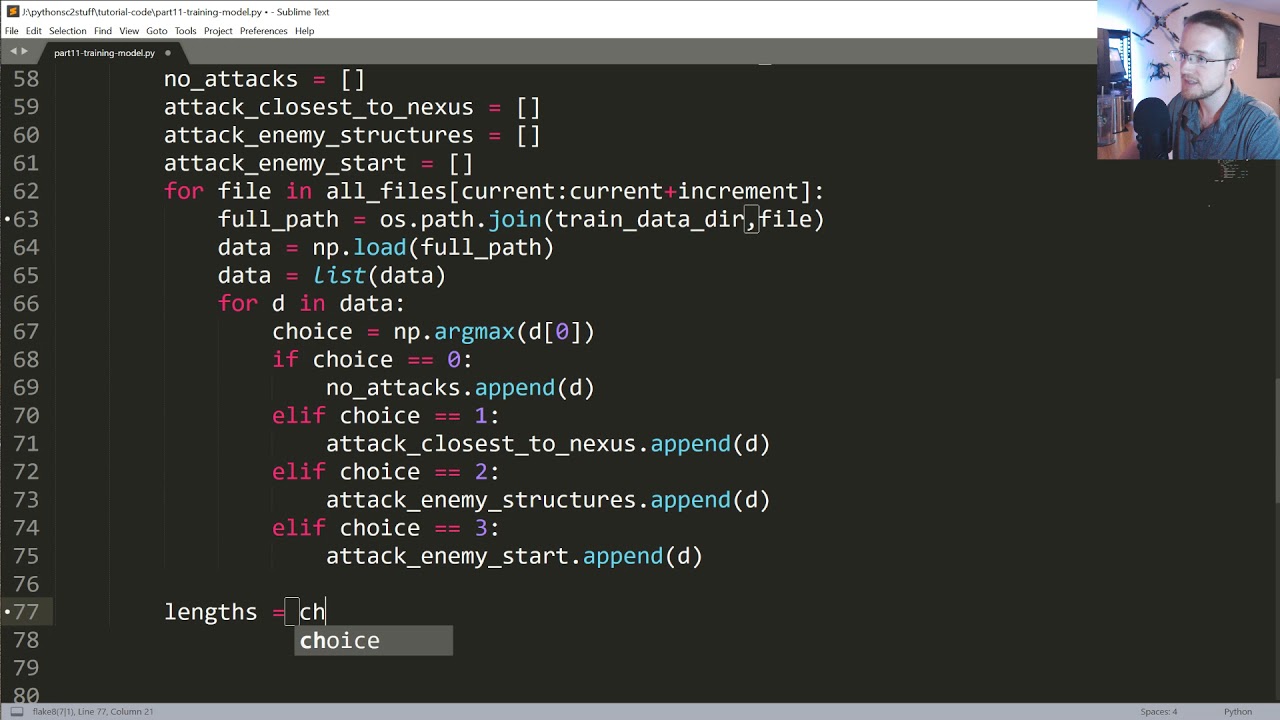
